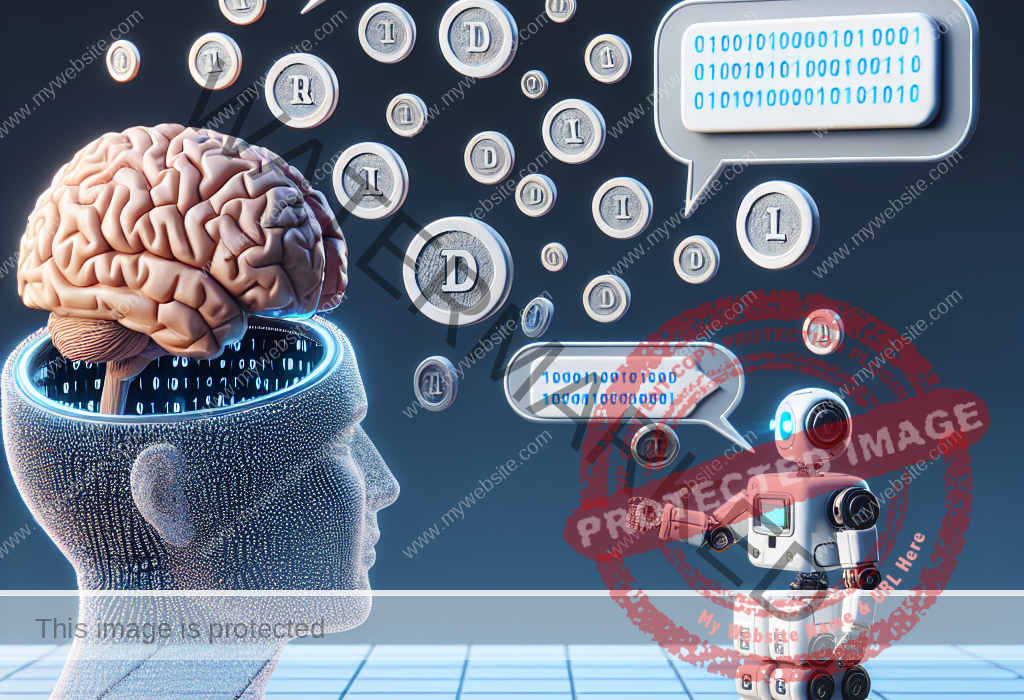

Understanding Tokenization and its Influence on AI in Learning and Development

Tokenization is a key concept for eLearning developers working with artificial intelligence (AI). It means breaking text into smaller units, known as tokens, which may be whole words, parts of words, or individual characters. AI language systems process these tokens rather than raw text, so the way text is split affects how well a system can capture context and meaning.
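To make the idea concrete, here is a minimal sketch of word-level tokenization in Python. It is an illustration only: the function name `simple_tokenize` and the splitting rule are assumptions made for this example, and production AI systems typically rely on more sophisticated subword tokenizers rather than a simple pattern like this one.

```python
import re

def simple_tokenize(text):
    """Split text into lowercase word tokens and single punctuation tokens.

    Hypothetical helper for illustration; not the tokenizer of any
    particular AI system.
    """
    # \w+ matches runs of letters, digits, or underscores;
    # [^\w\s] matches any single character that is neither a word
    # character nor whitespace, such as punctuation.
    return re.findall(r"\w+|[^\w\s]", text.lower())

tokens = simple_tokenize("Tokenization helps AI analyze language.")
print(tokens)
# ['tokenization', 'helps', 'ai', 'analyze', 'language', '.']
```

Each item in the resulting list is one token. An AI system would then map tokens like these to numeric IDs before analyzing them.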